Authors:

(1) Timothy R. McIntosh;

(2) Teo Susnjak;

(3) Tong Liu;

(4) Paul Watters;

(5) Malka N. Halgamuge.

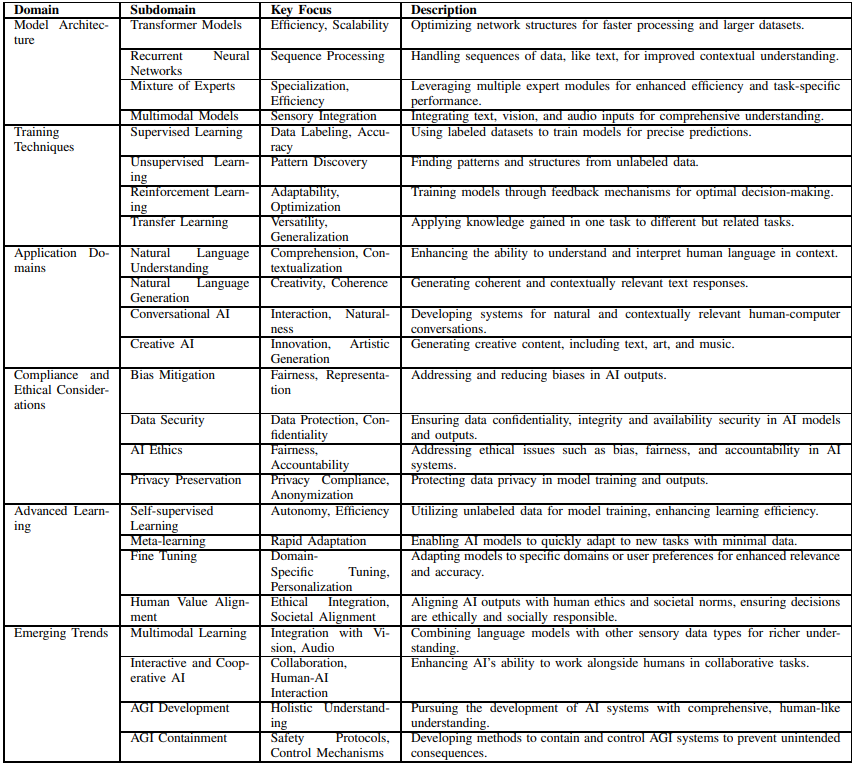

III. THE CURRENT GENERATIVE AI RESEARCH TAXONOMY

The field of Generative AI is evolving rapidly, which necessitates a comprehensive taxonomy that encompasses the breadth and depth of research within this domain. Detailed in Table I, this taxonomy categorizes the key areas of inquiry and innovation in generative AI and serves as a foundational framework for understanding the current state of the field. It guides the reader through evolving model architectures, advanced training methodologies, diverse application domains, ethical implications, and the frontiers of emerging technologies.

A. Model Architectures

Generative AI model architectures have seen significant developments, with four key domains standing out:

• Transformer Models: Transformer models have revolutionized the field of AI, especially NLP, owing to their efficiency and scalability [139], [140], [141]. They employ attention mechanisms to improve contextual processing, allowing for a more nuanced understanding of the input [142], [143], [144]. Transformers have also made notable strides in computer vision, as evidenced by vision transformers like EfficientViT [145], [146] and by detection models such as YOLOv8 [147], [148], [149]. These innovations illustrate the extended reach of transformer-era architectures in areas such as object detection, offering not only improved performance but also increased computational efficiency.

• Recurrent Neural Networks (RNNs): RNNs excel at sequence modeling, which makes them particularly effective for tasks involving language and temporal data. Their architecture is specifically designed to process sequences of data, such as text, enabling them to capture the context and order of the input effectively [150], [151], [152], [153], [154]. This proficiency in handling sequential information renders them indispensable in applications that require a deep understanding of the temporal dynamics within data, such as natural language tasks and time-series analysis [155], [156]. RNNs’ ability to maintain a sense of continuity over sequences is a critical asset in the broader field of AI, especially in scenarios where context and historical data play crucial roles [157].

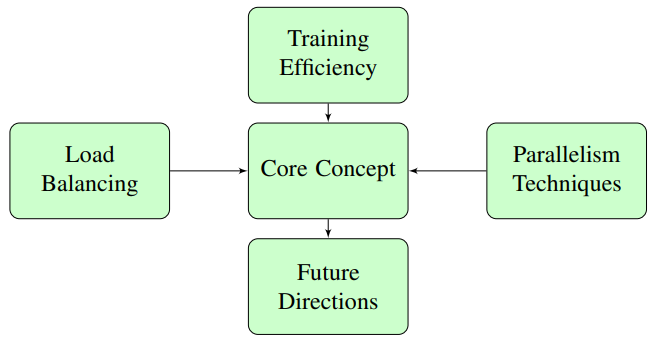

• Mixture of Experts (MoE): MoE models can significantly enhance efficiency by distributing computation across multiple specialized expert modules using model parallelism. Transformer-based MoE layers route each token dynamically to selected experts, which lets these models scale to trillions of parameters while reducing both memory footprint and computational costs [94], [98]. Because the computational load is divided among experts that each specialize in different aspects of the data, MoE models handle very large parameter counts more effectively and treat complex tasks in a more efficient and specialized way [94], [21].

• Multimodal Models: Multimodal models, which integrate a variety of sensory inputs such as text, vision, and audio, are crucial for achieving a comprehensive understanding of complex data sets and have been particularly transformative in fields like medical imaging [113], [112], [115]. These models facilitate accurate and data-efficient analysis by employing multi-view pipelines and cross-attention blocks [158], [159]. This integration of diverse sensory inputs allows for a more nuanced and detailed interpretation of data, enhancing the model’s ability to accurately analyze and understand various types of information [160]. The combination of different data types, processed concurrently, enables these models to provide a holistic view, making them especially effective in applications that require a deep and multifaceted understanding of complex scenarios [113], [161], [162], [160].
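The dynamic token routing described in the Mixture of Experts item above can be illustrated with a small sketch. It assumes a softmax gate with top-k selection, which is one common routing scheme and not necessarily the one used in the cited works. The names `route_token`, `gate_weights`, and the three toy experts are hypothetical and exist only for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(token, gate_weights, experts, top_k=2):
    """Send one token vector to its top_k experts and mix their outputs.

    gate_weights[i] is a hypothetical gating vector for expert i; the gate
    score of an expert is the dot product of that vector with the token.
    """
    scores = [sum(w * x for w, x in zip(weights, token)) for weights in gate_weights]
    probs = softmax(scores)
    # Only the top_k experts are evaluated; the others are skipped entirely,
    # which is where the per-token compute saving comes from.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        expert_out = experts[i](token)
        weight = probs[i] / norm  # renormalize over the selected experts
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen


# Three toy "experts": each is a fixed element-wise transform (assumption).
experts = [
    lambda v: [2.0 * x for x in v],
    lambda v: [x + 1.0 for x in v],
    lambda v: [-x for x in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

mixed, used = route_token([3.0, 1.0], gate_weights, experts, top_k=2)
print(used, mixed)
```

In this toy setup the third expert receives the lowest gate score for the example token, so it is never called. Real MoE layers add details this sketch omits, such as load balancing across experts and batching of tokens per expert.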

B. Training Techniques

The training of generative AI models leverages four key techniques, each contributing uniquely to the field:

• Supervised Learning: Supervised learning, a foundational approach in AI, uses labeled datasets to guide models towards accurate predictions, and it has been integral to various applications, including image recognition and NLP [163], [164], [165]. Recent advancements have focused on developing sophisticated loss functions and regularization techniques, aimed at enhancing the performance and generalization capabilities of supervised learning models, ensuring they remain robust and effective across a wide range of tasks and data types [166], [167], [168].

• Unsupervised Learning: Unsupervised learning is essential in AI for uncovering patterns within unlabeled data, a process central to tasks like feature learning and clustering [169], [170]. This method has seen significant advancements with the introduction of autoencoders [171], [172] and Generative Adversarial Networks (GANs) [173], [174], [175], which have notably expanded unsupervised learning’s applicability, enabling more sophisticated data generation and representation learning capabilities. Such innovations are crucial for understanding and leveraging the complex structures often inherent in unstructured datasets, highlighting the growing versatility and depth of unsupervised learning techniques.

• Reinforcement Learning: Reinforcement learning, characterized by its adaptability and optimization capabilities, has become increasingly vital in decision-making and autonomous systems [176], [177]. This training technique has undergone significant advancements, particularly with the development of Deep Q-Networks (DQN) [178], [179], [180] and Proximal Policy Optimization (PPO) algorithms [181], [182], [183]. These enhancements have been crucial in improving the efficacy and applicability of reinforcement learning, especially in complex and dynamic environments. By optimizing decisions and policies through interactive feedback loops, reinforcement learning has established itself as a crucial tool for training AI systems in scenarios that demand a high degree of adaptability and precision in decision-making [184], [185].

• Transfer Learning: Transfer learning emphasizes versatility and efficiency in AI training, allowing models to apply knowledge acquired from one task to different yet related tasks, which significantly reduces the need for large labeled datasets [186], [187]. Transfer learning, through the use of pre-trained networks, streamlines the training process by allowing models to be efficiently fine-tuned for specific applications, thereby enhancing adaptability and performance across diverse tasks, and proving particularly beneficial in scenarios where acquiring extensive labeled data is impractical or unfeasible [188], [189].
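The interactive feedback loop mentioned in the Reinforcement Learning item above can be sketched with tabular Q-learning, the classical value-update rule that Deep Q-Networks approximate with a neural network. This is a deliberately simplified stand-in, not DQN or PPO themselves. The corridor environment, the function name `train_q_table`, and all hyperparameter values are assumptions chosen for illustration.

```python
import random


def train_q_table(n_states=5, episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical one-dimensional corridor.

    States are 0..n_states-1. Action 0 moves left, action 1 moves right.
    Reaching the right-most state ends the episode with reward 1.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on steps per episode
            if state == goal:
                break
            # Epsilon-greedy action choice; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The terminal state has no future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            # Move the estimate toward reward + discounted best future value.
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q_table = train_q_table()
# Greedy action in every non-terminal state (1 means "move right").
greedy_policy = [0 if left > right else 1 for left, right in q_table[:-1]]
print(greedy_policy)
```

The agent is never told that moving right is correct; the preference emerges from the reward signal alone, which is the property the text attributes to reinforcement learning.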

C. Application Domains

The application domains of Generative AI are remarkably diverse and evolving, encompassing both established and emerging areas of research and application. These domains have been significantly influenced by recent advancements in AI technology and the expanding scope of AI applications.

• Natural Language Understanding (NLU): NLU is central to enhancing the comprehension and contextualization of human language in AI systems, and involves key capabilities such as semantic analysis, named entity recognition, sentiment analysis, textual entailment, and machine reading comprehension [190], [191], [192], [193]. Advances in NLU have been crucial in improving AI’s proficiency in interpreting and analyzing language across a spectrum of contexts, ranging from straightforward conversational exchanges to intricate textual data [190], [192], [193]. NLU is fundamental in applications like sentiment analysis, language translation, information extraction, and more [194], [195], [196]. Recent advancements have prominently featured large transformer-based models like BERT and GPT-3, which have significantly advanced the field by enabling a deeper and more complex understanding of language subtleties [197], [198].

• Natural Language Generation (NLG): NLG emphasizes the training of models to generate coherent, contextually relevant, and creative text responses, a critical component in chatbots, virtual assistants, and automated content creation tools [199], [36], [200], [201]. NLG encompasses challenges such as topic modeling, discourse planning, concept-to-text generation, style transfer, and controllable text generation [36], [202]. The recent surge in NLG capabilities, exemplified by advanced models like GPT-3, has significantly enhanced the sophistication and nuance of text generation, enabling AI systems to produce text that closely mirrors human writing styles and thereby broadening the scope and applicability of NLG in various interactive and creative contexts [203], [55], [51].

• Conversational AI: This subdomain is dedicated to developing AI systems capable of smooth, natural, and context-aware human-computer interactions, by focusing on dialogue modeling, question answering, user intent recognition, and multi-turn context tracking [204], [205], [206], [207]. In finance and cybersecurity, AI’s predictive analytics have transformed risk assessment and fraud detection, leading to more secure and efficient operations [205], [19]. The advancements in this area, demonstrated by large pre-trained models like Meena[7] and BlenderBot[8], have significantly enhanced the empathetic and responsive capabilities of AI interactions. These systems not only improve user engagement and satisfaction, but also maintain the flow of conversation over multiple turns, providing coherent, contextually relevant, and engaging experiences [208], [209].

• Creative AI: This emerging subdomain spans text, art, music, and more, pushing the boundaries of AI’s creative and innovative potential across modalities including images, audio, and video. It covers the generation of artistic content, with applications in idea generation, storytelling, poetry, music composition, visual arts, and creative writing, and it has produced commercial successes such as Midjourney and DALL-E [210], [211], [212]. The challenges in this field involve finding suitable data representations, algorithms, and evaluation metrics to effectively assess and foster creativity [212], [213]. Creative AI serves not only as a tool for automating and enhancing artistic processes, but also as a medium for exploring new forms of artistic expression, enabling the creation of novel and diverse creative outputs [212]. This domain represents a significant leap in AI’s capability to engage in and contribute to creative endeavors, redefining the intersection of technology and art.

D. Compliance and Ethical Considerations

As AI technologies rapidly evolve and become more integrated into various sectors, ethical considerations and legal compliance have become increasingly crucial. This calls for a focus on developing ‘Ethical AI Frameworks’, a new category in our taxonomy that reflects the trend towards responsible AI development in generative AI [214], [215], [15], [216], [217]. Such frameworks help ensure that AI systems are built with a core emphasis on ethical considerations, fairness, and transparency: they address bias mitigation for fairness, privacy and security concerns for data protection, and AI ethics for accountability, responding to a landscape in which accountability in AI is of paramount importance [214], [15]. The need for rigorous approaches to uphold ethical integrity and legal conformity has never been more pressing, reflecting the complexity and multifaceted challenges introduced by the adoption of these technologies [15].

• Bias Mitigation: Bias mitigation in AI systems is a critical endeavor to ensure fairness and representation. It involves not only balanced data collection to avoid skewed perspectives but also algorithmic adjustments and regularization techniques to minimize biases [218], [219]. Continuous monitoring and bias testing are essential to identify and address any biases that may emerge from AI’s predictive patterns [220], [219]. A significant challenge in this area is dealing with intersectional biases [221], [222], [223] and understanding the causal interactions that may contribute to these biases [224], [225], [226], [227].

• Data Security: In AI data security, key requirements and challenges include ensuring data confidentiality, adhering to consent norms, and safeguarding against vulnerabilities like membership inference attacks [228], [229]. Compliance with stringent legal standards within applicable jurisdictions, such as the General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA), is essential, necessitating purpose limitation and data minimization [230], [231], [232]. Additionally, issues of data sovereignty and copyright emphasize the need for robust encryption, access control, and continuous security assessments [233], [234]. These efforts are critical for maintaining the integrity of AI systems and protecting user privacy in an evolving digital landscape.

• AI Ethics: The field of AI ethics focuses on fairness, accountability, and societal impact, addresses the surge in ethical challenges posed by AI’s increasing complexity and potential misalignment with human values, and requires ethical governance frameworks, multidisciplinary collaborations, and technological solutions [214], [235], [15], [236]. Furthermore, AI Ethics involves ensuring traceability, auditability, and transparency throughout the model development lifecycle, employing practices such as algorithmic auditing, establishing ethics boards, and adhering to documentation standards and model cards [237], [236]. However, the adoption of these initiatives remains uneven, highlighting the ongoing need for comprehensive and consistent ethical practices in AI development and deployment [214].

• Privacy Preservation: This domain focuses on maintaining data confidentiality and integrity, employing strategies like anonymization and federated learning to minimize direct data exposure, especially when the rise of generative AI poses risks of user profiling [238], [239]. Despite these efforts, challenges such as achieving true anonymity against correlation attacks highlight the complexities in effectively protecting against intrusive surveillance [240], [241]. Ensuring compliance with privacy laws and implementing secure data handling practices are crucial in this context, demonstrating the continuous need for robust privacy preservation mechanisms.
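The federated learning strategy named in the Privacy Preservation item above keeps raw data on each client and shares only model parameters. A minimal sketch of the server-side aggregation step follows, assuming sample-count-weighted averaging (the rule commonly called federated averaging). The source does not say which aggregation rule the cited works use, and the function name `federated_average` and the example numbers are hypothetical.

```python
def federated_average(client_updates):
    """Combine client models into one global model, weighted by sample count.

    client_updates is a list of (weights, num_samples) pairs, where weights
    is a list of floats with the same length for every client. Only these
    parameters reach the server; the clients' training data never does.
    """
    total = sum(num_samples for _, num_samples in client_updates)
    if total == 0:
        raise ValueError("clients reported no training samples")
    size = len(client_updates[0][0])
    global_weights = [0.0] * size
    for weights, num_samples in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send the same number of weights")
        for i, w in enumerate(weights):
            global_weights[i] += w * num_samples / total
    return global_weights


# Two hypothetical clients: the second trained on three times as much data,
# so its parameters count three times as much in the average.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
global_model = federated_average(updates)
print(global_model)
```

Sharing parameters instead of data reduces direct exposure but does not by itself guarantee privacy, which is consistent with the residual risks the text notes.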

E. Advanced Learning

Advanced learning techniques, including self-supervised learning, meta-learning, and fine-tuning, are at the forefront of AI research, enhancing the autonomy, efficiency, and versatility of AI models.

• Self-supervised Learning: This method emphasizes autonomous model training using unlabeled data, reducing manual labeling efforts and model biases [242], [165], [243]. It incorporates generative models like autoencoders and GANs for data distribution learning and original input reconstruction [244], [245], [246], and also includes contrastive methods such as SimCLR [247] and MoCo [248], designed to differentiate between positive and negative sample pairs. Further, it employs self-prediction strategies, inspired by NLP, using techniques like masking for input reconstruction, significantly enhanced by recent developments in Vision Transformers [249], [250], [165]. This integration of varied methods highlights self-supervised learning’s role in advancing AI’s autonomous training capabilities.

• Meta-learning: Meta-learning, or ‘learning to learn’, centers on equipping AI models with the ability to rapidly adapt to new tasks and domains using limited data samples [251], [252]. This technique involves mastering the optimization process and is critical in situations with limited data availability, to ensure models can quickly adapt and perform across diverse tasks, essential in the current data-driven landscape [253], [254]. It focuses on few-shot generalization, enabling AI to handle a wide range of tasks with minimal data, underlining its importance in developing versatile and adaptable AI systems [255], [256], [254], [257].

• Fine-tuning: Fine-tuning customizes pre-trained models for specific domains or user preferences, enhancing accuracy and relevance for niche applications [60], [258], [259]. Its two primary approaches are end-to-end fine-tuning, which adjusts all weights of the encoder and classifier [260], [261], and feature-extraction fine-tuning, where the encoder weights are frozen to extract features for a downstream classifier [262], [263], [264]. This technique ensures that generative models are more effectively adapted to specific user needs or domain requirements, making them more versatile and applicable across various contexts.

• Human Value Alignment: This emerging aspect concentrates on harmonizing AI models with human ethics and values so that their decisions and actions mirror societal norms and ethical standards. It involves integrating ethical decision-making processes and adapting AI outputs to conform with human moral values [265], [89], [266]. Alignment is increasingly important where AI interacts closely with humans, such as in healthcare, finance, and personal assistants, because decisions there must be not only technically sound but also ethically and socially responsible. Human value alignment is therefore becoming crucial to developing AI systems that are trusted and accepted by society [89], [267].
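The contrastive methods mentioned in the Self-supervised Learning item above train a model to score a positive pair higher than negative pairs. The sketch below shows an InfoNCE-style loss for a single anchor, the family of objectives that SimCLR and MoCo build on. It is simplified: real implementations operate on batches of learned embeddings, and the vectors, the temperature value, and the function names here are illustrative assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Contrastive loss for one anchor.

    Computes the negative log of the softmax probability assigned to the
    positive among the positive plus all negatives, using cosine similarity
    scaled by the temperature as the score.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]


anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]

# A positive that points almost the same way as the anchor gives a low loss.
loss_aligned = info_nce(anchor, [0.9, 0.1], negatives)
# A positive that is no closer than a negative gives a higher loss.
loss_misaligned = info_nce(anchor, [0.0, 1.0], negatives)
print(loss_aligned < loss_misaligned)
```

Minimizing this loss pulls the two views of the same input together and pushes other inputs away, without any manual labels.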

F. Emerging Trends

Emerging trends in generative AI research are shaping the future of technology and human interaction, and they indicate a dynamic shift towards more integrated, interactive, and intelligent AI systems, driving forward the boundaries of what is possible in the realm of AI. Key developments in this area include:

• Multimodal Learning: Multimodal learning in AI, a rapidly evolving subdomain, focuses on combining language understanding with computer vision and audio processing to achieve a richer, multi-sensory context awareness [114], [268]. Recent developments like the Gemini model have set new benchmarks by demonstrating state-of-the-art performance in various multimodal tasks, including natural image, audio, and video understanding, and mathematical reasoning [112]. Gemini’s inherently multimodal design exemplifies the seamless integration and operation across different information types [112]. Despite these advancements, the field of multimodal learning still confronts ongoing challenges, such as refining the architectures to handle diverse data types more effectively [269], [270], developing comprehensive datasets that accurately represent multifaceted information [269], [271], and establishing benchmarks for evaluating the performance of these complex systems [272], [273].

• Interactive and Cooperative AI: This subdomain aims to enhance the capabilities of AI models to collaborate effectively with humans in complex tasks [274], [35]. It focuses on developing AI systems that can work alongside humans, thereby improving user experience and efficiency across various applications, including productivity and healthcare [275], [276], [277]. Core aspects include advancing explainability [278], understanding human intentions and behavior (theory of mind) [279], [280], and scalable coordination between AI systems and humans. This collaborative approach is crucial to creating more intuitive and interactive AI systems that can assist and augment human capabilities in diverse contexts [281], [35].

• AGI Development: AGI, representing the visionary goal of crafting AI systems that emulate the comprehensive and multifaceted aspects of human cognition, is a subdomain focused on developing AI with the capability for holistic understanding and complex reasoning that closely aligns with the depth and breadth of human cognitive abilities [282], [283], [32]. AGI is not just about replicating human intelligence, but also involves crafting systems that can autonomously perform a variety of tasks, demonstrating adaptability and learning capabilities akin to those of humans [282], [283]. The pursuit of AGI is a long-term aspiration, continually pushing the boundaries of AI research and development.

• AGI Containment: AGI safety and containment acknowledges the potential risks associated with highly advanced AI systems and focuses on ensuring that these systems are not only technically proficient but also ethically aligned with human values and societal norms [15], [32], [11]. As we progress towards developing superintelligent systems, it becomes crucial to establish rigorous safety protocols and control mechanisms [11]. Key areas of concern include mitigating representational biases, addressing distribution shifts, and correcting spurious correlations within AI models [11], [284]. The objective is to prevent unintended societal consequences by aligning AI development with responsible and ethical standards.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.

[7] https://neptune.ai/blog/transformer-nlp-models-meena-lamda-chatbots

[8] https://blenderbot.ai