Authors:

(1) Timothy R. McIntosh;

(2) Teo Susnjak;

(3) Tong Liu;

(4) Paul Watters;

(5) Malka N. Halgamuge.

X. IMPACT OF GENERATIVE AI ON PREPRINTS ACROSS DISCIPLINES

The challenges detailed in this section are not directly related to the knowledge domains within generative AI, but are fueled by its success, particularly the commercialization of ChatGPT. The proliferation of preprints in the field of AI (Fig. 7), especially in the cs.AI category on platforms like arXiv, has introduced a set of academic challenges that merit careful consideration and strategic response. The rapid commercialization and adoption of tools such as ChatGPT, as evidenced by over 55,700 entries on Google Scholar mentioning “ChatGPT” within just one year of its commercialization, exemplify the accelerated pace at which the field is advancing. This rapid development is not mirrored in the traditional peer-review process, which is considerably slower. The peer-review process now appears to be overwhelmed with manuscripts that are either generated with ChatGPT (or other LLMs), or whose writing has been significantly accelerated by such LLMs, contributing to a bottleneck in scholarly communication [325], [326]. This situation is further compounded by the fact that many journals in disciplines outside of computer science are also experiencing longer review times and higher rates of desk rejections. Additionally, the growing number of manuscripts and preprints either generated by or significantly expedited using tools like ChatGPT extends beyond computer science into diverse academic disciplines. This trend presents a looming challenge, potentially overwhelming both the traditional peer-review process and the flourishing preprint ecosystem with a volume of work that may not always adhere to established academic standards.

The sheer volume of preprints has made the task of selecting and scrutinizing research exceedingly demanding. In the current research era, the exploration of scientific literature has become increasingly complex: knowledge continues to expand and disseminate exponentially, while integrative research efforts that attempt to distill this vast literature seek to identify and understand a smaller set of core contributions [327]. Thus, the rapid expansion of academic literature across various fields presents a significant challenge for researchers seeking to perform evidence syntheses over the increasingly vast body of available knowledge [328]. Furthermore, this explosion in publication volume poses a distinct challenge for literature reviews and surveys, where the human capacity for manually selecting, understanding, and critically evaluating articles is increasingly strained, potentially leading to gaps in synthesizing comprehensive knowledge landscapes. Although reproduction of results is a theoretical possibility, practical constraints such as the lack of technical expertise, computational resources, or access to proprietary datasets hinder rigorous evaluation. This is concerning, as the inability to thoroughly assess preprint research undermines the foundation of scientific reliability and validity. Moreover, the peer-review system, a cornerstone of academic rigour, is under threat of being further overwhelmed [325], [329]. The potential consequences are significant, with unvetted preprints possibly perpetuating biases or errors within the scientific community and beyond. The absence of established retraction mechanisms for preprints, akin to those for published articles, exacerbates the risk of persistent dissemination of flawed research.

The academic community is at a crossroads, necessitating an urgent and thoughtful discourse on navigating this emerging “mess”, a situation that risks spiraling out of control if left unaddressed. In this context, the role of peer review becomes increasingly crucial, as it serves as a critical checkpoint for quality and validity, ensuring that the rapidly produced AI research is rigorously scrutinized for scientific accuracy and relevance. However, the current modus operandi of traditional peer review does not appear to be sustainable, primarily due to its inability to keep pace with the exponential growth in AI-themed research and Generative-AI-accelerated research submissions, and the increasingly specialized nature of emerging AI topics [325], [326]. This situation is compounded by a finite pool of qualified reviewers, leading to delays, potential biases, and a burden on the scholarly community. This reality demands an exploration of new paradigms for peer review and dissemination of research that can keep pace with swift advancements in AI. Innovative models for community-driven vetting processes, enhanced reproducibility checks, and dynamic frameworks for post-publication scrutiny and correction may be necessary. Efforts to incorporate automated tools and AI-assisted review processes could also be explored to alleviate the strain on human reviewers.

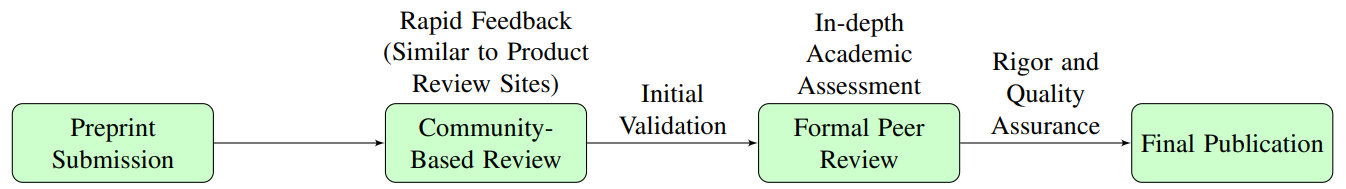

In this rapidly evolving landscape, we envision a convergence between the traditional peer review system and the flourishing preprint ecosystem. This could involve creating hybrid models (Fig. 8), where preprints undergo a preliminary community-based review, harnessing the collective expertise and rapid feedback of the academic community, similar to product review websites and Twitter [330]. This approach could provide an initial layer of validation, offering additional insights on issues that may be overlooked by a limited number of peer reviewers. The Editors-in-Chief (EICs) could consider the major criticisms and suggestions of an article from the community-based review, ensuring a more thorough and diverse evaluation. Subsequent, more formal peer review processes could then refine and endorse these preprints for academic rigor and quality assurance. This hybrid model would require robust technological support, possibly leveraging AI and machine learning tools to assist in initial screening and identification of suitable reviewers. The aim would be to establish a seamless continuum from rapid dissemination to validated publication, ensuring both the speed of preprints and the credibility of peer-reviewed research. A balance must be struck to harness the benefits of preprints, such as rapid dissemination of findings and open access, while mitigating their drawbacks. The development of new infrastructure and norms could be instrumental in steering the academic community towards a sustainable model that upholds the integrity and trustworthiness of scientific research in the age of Generative AI.

This paper is available on arXiv under the CC BY-NC-ND 4.0 DEED license.